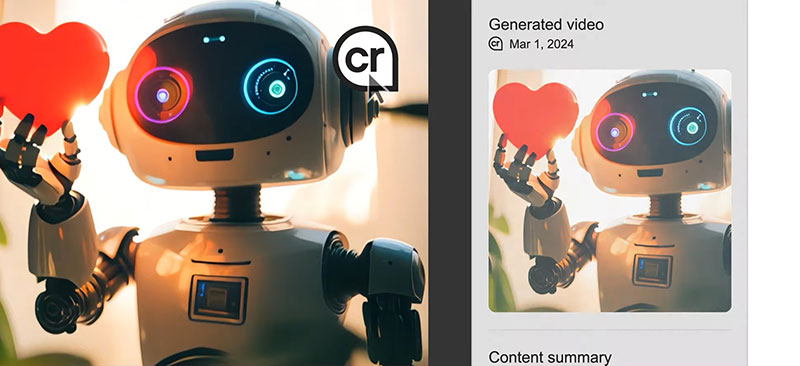

Generating mood boards with Firefly Boards

Adobe is continuing to expand its Adobe Firefly software, which is designed for users who want to work with AI models to develop ideas and concepts for content creation. Among a new series of Firefly updates is a mobile app that brings Firefly’s image and video generation and editing tools to users’ phones.

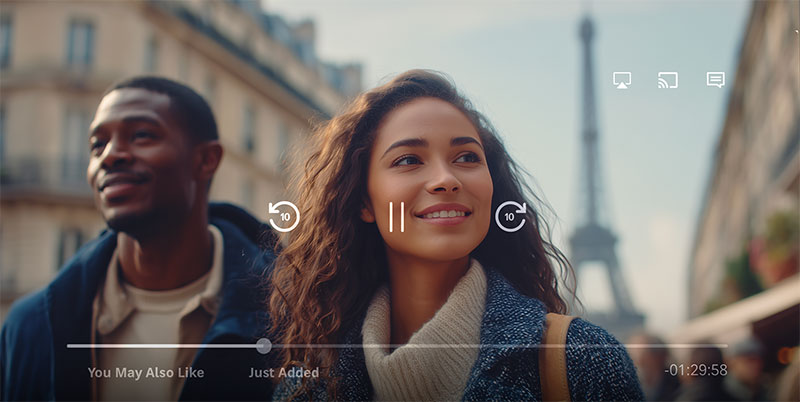

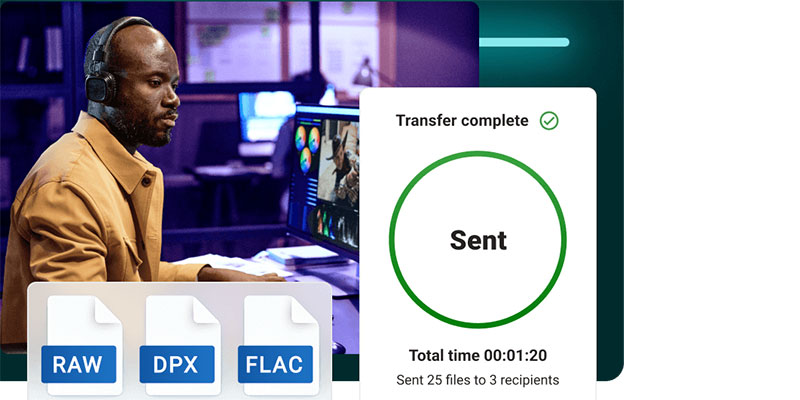

Firefly’s web and mobile apps work as standalone tools and also synchronise directly with Adobe Creative Cloud applications. Content created in Firefly is automatically synced to the user’s Creative Cloud account, so an artist can start a project in the new mobile app, continue it on the web and later move to desktop software such as Photoshop and Premiere Pro. This supports project continuity and lets users build workflows that run from concept development through to production.

These workflows can start from text prompts (Text to Image, Text to Video) or from images that are turned into videos (Image to Video). They can also add or remove objects (Generative Fill) and enlarge an image by filling the new areas with AI-generated content (Generative Expand).

The Firefly mobile app joins Adobe’s existing collection of mobile apps, which already includes Photoshop, Lightroom and Adobe Express.

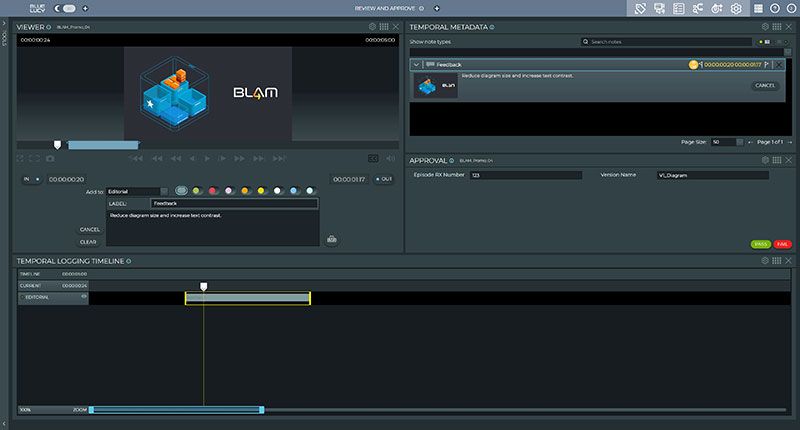

New Generative AI Models for Creative Cloud Users

Adobe has its own set of Firefly generative AI models, but Firefly users can also access OpenAI’s image generation model as well as Google’s Imagen 3, Imagen 4, Veo 2 and Veo 3 models. This range of third-party generative AI models is growing: in another Firefly update, the lineup now includes models from Ideogram, Luma AI, Pika and Runway, along with image and video models from Black Forest Labs. Access to a variety of models gives users more flexibility to experiment and work in different aesthetic styles.

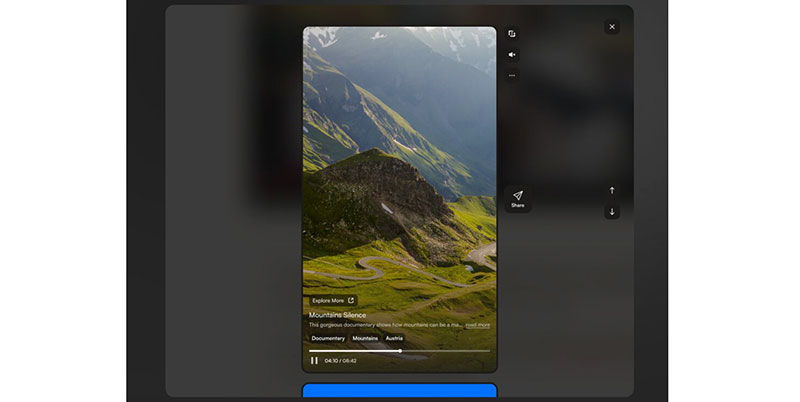

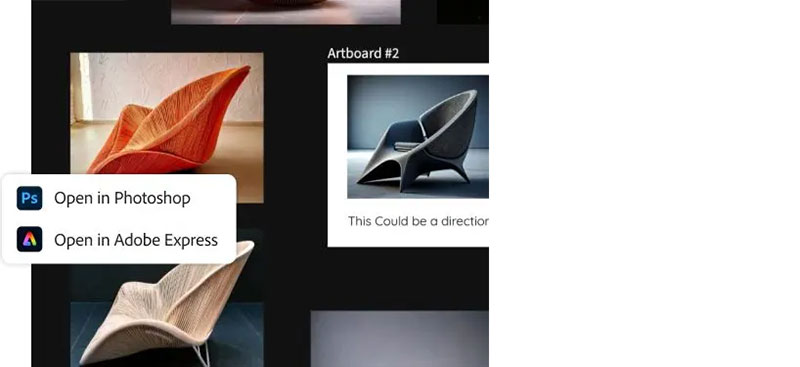

Using the Style Reference and Structure Reference modes, creators can generate an image that follows the style or composition of an uploaded reference image. Assets can be edited with the Generative Expand, Generative Remove and Generative Fill options, which extend an image or use a brush to remove objects or paint in new ones. Users can also experiment with variations of their creation by mixing and matching models, and turn generated images into video, all without leaving Firefly.

Other options include generating images from a 3D scene with Scene to Image, now in beta, and producing editable vector-based artwork and design templates from natural-language text prompts. Later in July 2025, users will also be able to generate avatars and sound effects from text prompts, and sync audio and video clips using the sound of their own voice.

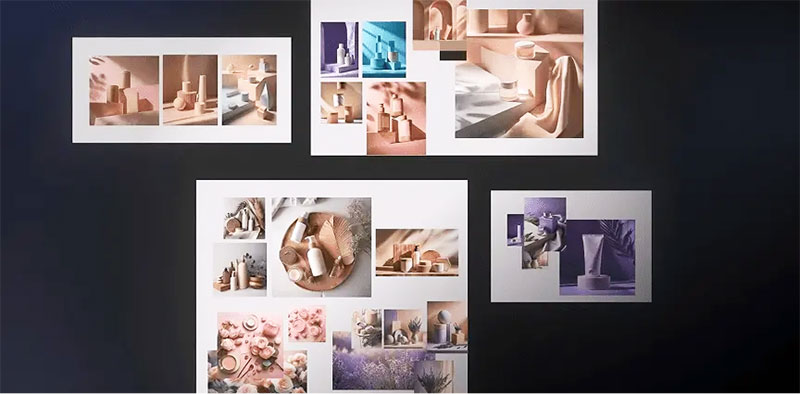

Firefly Mood Boards

Firefly Boards is an AI-assisted mood board application, now available in public beta on the web. With the addition of video, it gives creative teams another way to collaborate on concepts. Users can move between media types while using AI-powered image and video editing, remix uploaded video clips, and generate new video footage with Adobe’s Firefly Video Model or with Google Veo 3, Luma AI’s Ray2 or Pika 2.2 for text-to-video.

Besides generating images with Firefly and partner models, creative teams can use the AI capabilities in Firefly Boards to make iterative edits to images through conversational text prompts, using Black Forest Labs’ Flux.1 Kontext and OpenAI’s image generation capabilities.

Note that the new models from partner companies are launching first in Firefly Boards and will soon be accessible across the Firefly app.

www.adobe.com